Ha – My friend Charles hit the trifecta this week – Boing Boing, Gizmodo and the Digg front page! Congrats!

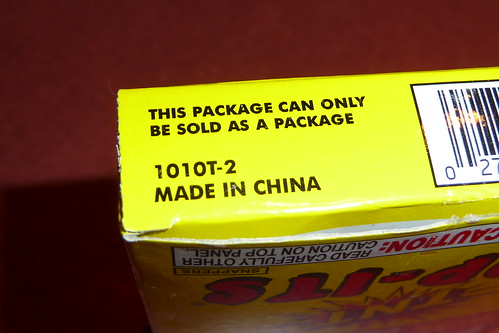


i also bought it as a package., originally uploaded by ih8gates.

Tired Templates

I recently helped judge a competition for the best law firm Web site, sponsored by North Carolina Lawyers Weekly. In looking over the several dozen sites that entered the competition, it was hard to miss the fact that a large portion of the sites were created by one firm — a vendor that specializes in building sites for law firms. The sites created by this vendor were really similar. If I were in the market for a lawyer and decided to do my research online (and who wouldn’t?), I’d have a hard time discerning any difference between competitors.

My point isn’t that templated sites are bad, or that employing this type of vendor is a bad thing. The lesson here is that if you work with any vendor that’s building a site for you, and they offer some sort of canned template service, don’t use it. Hire your own designer. Pay a photographer for some custom shots. Stand out.

Happily, there were firms that entered the competition that “got it”. These sites were good sites, but they stood out all the more when contrasted with their template-bound competition.

What is validation?

Validating your site is a good way to see how your site compares against THE SPEC as handed down by the W3C. Validation isn’t the end-all be-all, but a site that validates will likely be “future-proof” and ready for that new buzz-word-approved technology that you’ve never heard of but will become vitally important next week.

Validation essentially makes sure that your site follows the rules. Theoretically, if everyone plays by the rules, then your site should look good in any browser that embraces the rules – even browsers that have yet to be released.

Check your site: pick a page (the homepage is a good start) and run it through the form at:

http://validator.w3.org/

If you have no errors, then congrats. Your Web developer did you right. If you have just a few errors, then you’re probably fine, too. If you have 578 (give or take) errors, you may want to seek professional help.

"Taking aim at Target"

Target has been under fire recently for accessibility issues with their Web site. The US National Federation of the Blind (NFB) filed suit against them because their site is not accessible.

A fun wrinkle is that Target.com is powered by Amazon.com… Wonder where the accessibility faults lie?

It will be interesting to see what this issue does to raise awareness of site accessibility/508 compliance and CSS in general. Many of the problems cited by the NFB are basic and easily avoided.

Continuing coverage on webstandards.org.

Design Choices and User Testing

There was an interesting article on A List Apart recently about how design choices can drastically affect the way that users react to a site’s messaging. They took a page that contained a critical call to action and tested two variations of the design, along with minor copy tweaks.

The results were really surprising.

One of the designs that appeared to improve readability and usability actually resulted in a 53% drop in measured reaction. Wow!

When we build sites, we make so many decisions based on our past experience, training and hunches… This article suggests that “testing” is an important addition to that list.

“One way or another, it’s important to accept that none of us—neither designers nor writers—know what the “best” page design or copy is until we test”.

The article also mentions the importance of integration of writers within the process. I’d be surprised if many Web builders, designers, architects, etc. spend a lot of time—right from the beginning of the project—with their writers, ensuring a good fit of copy to display.

Good stuff from ALA.

Greasemonkey script to fix Audible's bad UI

Audible.com did a crummy thing with their recent site redesign. They made all links into JavaScript actions.

This poses various usability issues (right-o – if you’ve turned off JavaScript, you’re out of luck). The biggest issue for me, though, is that it keeps me from opening links on the site in new tabs or windows. If you experience the Web in a single window, sans tabs, you might not even notice that there’s a problem. When I’m browsing a commerce site, I like to open links to products I may be interested in in a new tab. This lets me keep one tab open to the product list, avoiding excessive back-button usage. It also lets me zip through a product list, opening products in new tabs so that I can use the tabs as a sort of “browse queue” of things that looked interesting.

Apparently I wasn’t the only one who found this to be a pain. Paul Roub has created a nice little Greasemonkey script that turns the links into tabable (and permalinkable) links. Nifty.

If you’re unfamiliar with Greasemonkey, it’s a Firefox extension that lets you manipulate the behavior of any site. Here’s a brief tutorial…

Don't go there, friend… of meta refreshes and status codes.

I know I’ve been guilty of this one in the past, but I want you to make this pledge along with me: “No more abuse of the meta refresh!”

I know, it’s handy in so many ways. I used it most often as a way to create short URLs for my visitors that would redirect to a page with a longer, more complicated URL. I’d create a directory off of the root of a site and pop an index.html in the directory that would include a meta refresh to send visitors on to another page.

“So, what’s the deal?”

While the meta refresh appears to do the job, it’s not the best method to achieve this result. Two problems spring to mind… Firstly, it mucks up your visitor’s browser history. If they try to hit “back” from the page you redirected to, they’ll quickly find themselves right back on that same page, since the meta refresh will do its thing and redirect them again. The other issue is that most reputable search engines typically ignore meta refreshes. They have long been abused by search engine spammers and the search indexers have adapted.

The answer?

Use the 301 “Moved Permanently” status code. The 301 status code tells browsers and search indexers that the content they are looking for has permanently moved to another location. It’s Google-friendly and preserves your users’ browser history.

But how?

If you’re using Apache, it’s a matter of updating your .htaccess file. I’m a ColdFusion guy myself, and the following code works for me:

<cfheader statuscode="301" statustext="Moved Permanently">

<cfheader name="Location" value="http://www.yoururl.com/here/">
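
For the Apache route mentioned above, a minimal .htaccess sketch might look something like the following. It assumes mod_alias is enabled and that your host allows .htaccess overrides. The /short path is a made-up example, and the target reuses the placeholder URL from the ColdFusion snippet.

```apache
# Hypothetical example: "/short" is an illustrative path, not a real one.
# Assumes mod_alias is available on the server.
# Sends a 301 "Moved Permanently" for /short and anything beneath it.
Redirect 301 /short http://www.yoururl.com/here
```

Anything that follows /short in the requested URL gets appended to the target, so a visit to /short/ should land on /here/.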